Getting started with Generative AI

- Juan Diaz

- 24 Apr, 2025

- 4 min read

- Databricks

Generative AI refers to a category of artificial intelligence that can generate text, images, code, audio, and other content formats based on input data or prompts. It leverages powerful machine learning models known as foundation models, which are pre-trained on massive datasets and can be fine-tuned for a wide range of specific tasks.

One of the most prominent types of foundation model is the large language model (LLM). These models are capable of understanding and generating human-like language. ChatGPT and Claude are built on LLMs, and the openly available models from Meta or those hosted on Hugging Face are further examples.

In the context of Databricks, generative AI is used to accelerate data tasks, improve user interaction through natural language, and create intelligent assistants or applications. This blog introduces foundational concepts and essential techniques for building with generative AI on Databricks.

Illustration representing Generative AI capabilities — Image by Seanprai S. on Vecteezy

Prompt Engineering Primer

Basics

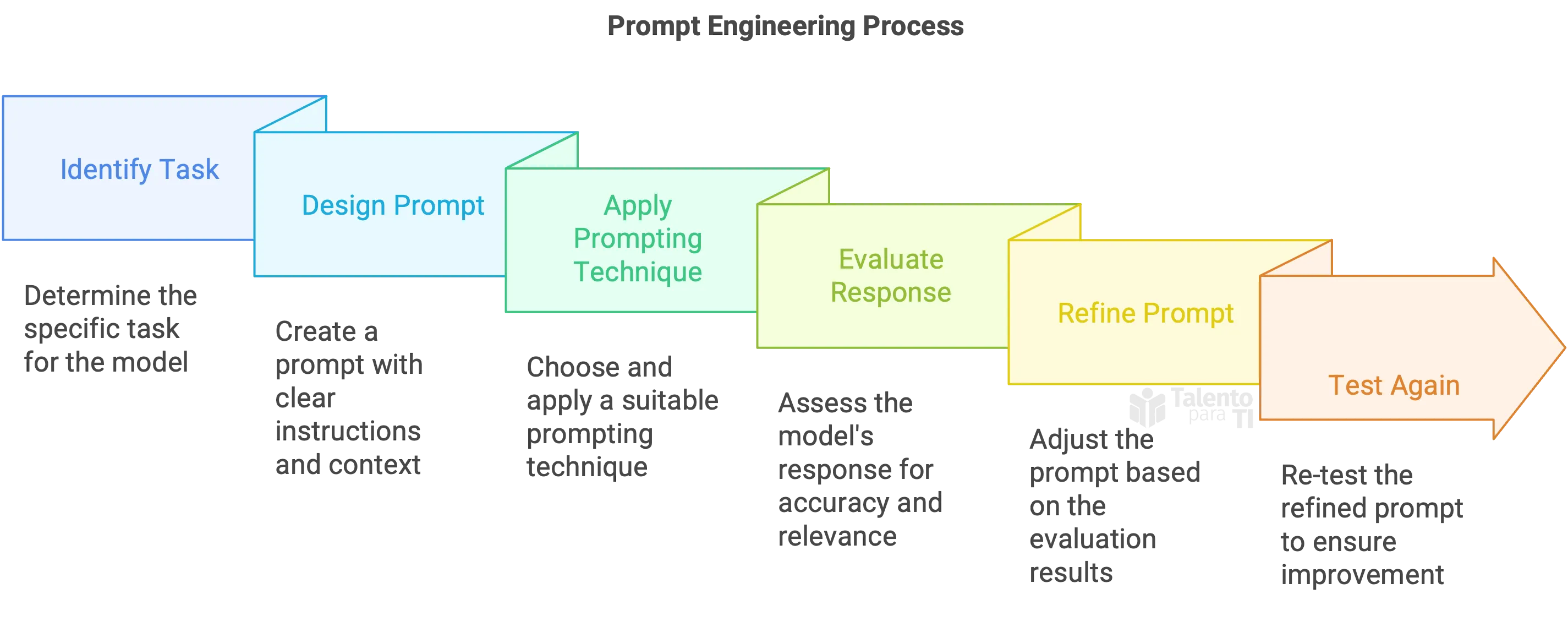

To start, let’s cover the basics of prompt engineering. Prompt engineering refers to the process of designing and refining prompts to optimize the response from a language model (LM). It’s all about crafting prompts that guide the model toward producing relevant and accurate responses.

- Prompt: The input or query given to the language model to elicit a response.

- Prompt engineering: The practice of designing and refining prompts to optimize the response from the model.

Prompt Components

Here are the main components of a prompt:

- Instruction: The task or question you want the model to address.

- Context: Additional information or background to help the model better understand the task.

- Input: The specific data or examples given to the model to assist in generating the response.

- Output: The expected result or response generated by the model.
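The four components above can be sketched as a small prompt builder. This is a minimal illustration, not a Databricks API: the function name `build_prompt`, its parameters, and the sample text are all hypothetical, and the "Output" section here is assumed to describe the expected response rather than contain it.

```python
def build_prompt(instruction: str, context: str = "",
                 input_data: str = "", output_format: str = "") -> str:
    """Assemble a prompt from the four components, skipping any left empty."""
    sections = [
        ("Instruction", instruction),
        ("Context", context),
        ("Input", input_data),
        ("Output", output_format),
    ]
    # Keep a fixed order so the model always sees the task first.
    return "\n\n".join(
        f"{label}:\n{text.strip()}" for label, text in sections if text.strip()
    )


# Hypothetical sample values, for illustration only.
prompt = build_prompt(
    instruction="Summarize the customer feedback below.",
    context="The feedback comes from a survey about a data platform.",
    input_data="The notebooks are great, but cluster startup is slow.",
    output_format="One sentence, neutral tone.",
)
print(prompt)
```

Keeping the components separate makes it easier to change one of them, for example the context, without rewriting the whole prompt.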

Prompt Engineering Techniques

Now, let’s explore several techniques for prompt engineering that can help improve model performance. In this blog, we’ll cover some of the most common techniques you can start using.

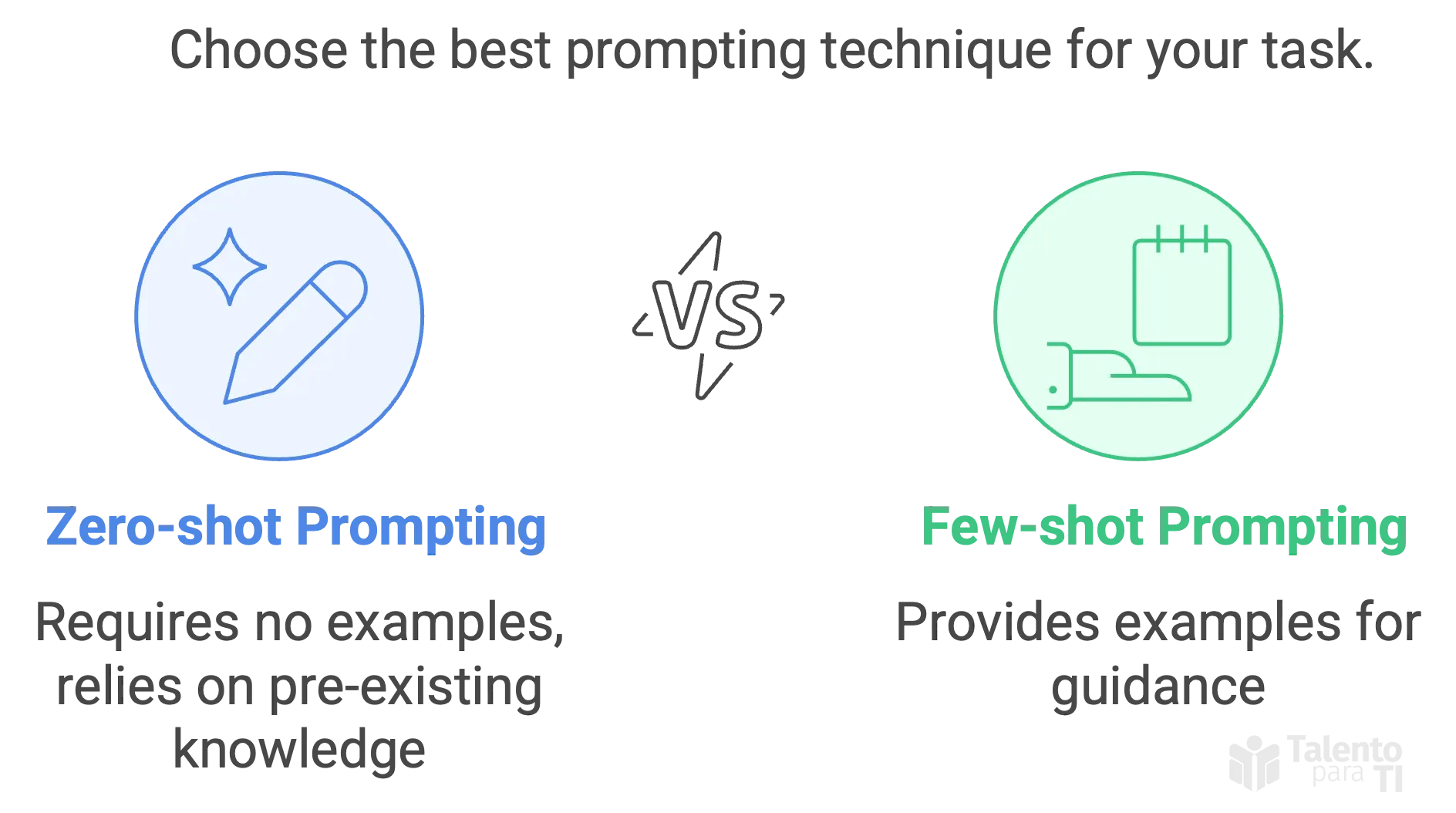

Zero-shot / Few-shot Prompting

- Zero-shot prompting: You give the model a task with no examples. The model relies on its pre-existing knowledge to generate a response. Here are two simple prompt examples:

Translate the following English sentence to Spanish: ‘Hello, how are you?’

Write a Databricks SQL query that analyzes customer data.

- Few-shot prompting: You provide the model with a few examples of the task you want it to perform. Here is a simple few-shot prompting example related to Databricks:

Write a Databricks SQL query. Here are some examples:

- A query that counts daily active users from the events table.

- A query that calculates average purchase value by customer segment.

- A query that identifies the top performing products by region.
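Few-shot prompts usually work best when each example pairs a request with the answer you expect. The sketch below assembles such a prompt; the helper name `few_shot_prompt`, the "Request:"/"SQL:" labels, and the table and column names in the sample queries are hypothetical and only serve as illustration. Passing an empty example list gives a zero-shot prompt.

```python
def few_shot_prompt(task: str, examples: list[tuple[str, str]], request: str) -> str:
    """Build a prompt from a task, (request, answer) example pairs, and a new request."""
    lines = [task, ""]
    for question, answer in examples:
        lines += [f"Request: {question}", f"SQL: {answer}", ""]
    # End with the new request and an open "SQL:" slot for the model to fill.
    lines += [f"Request: {request}", "SQL:"]
    return "\n".join(lines)


# Hypothetical tables and columns, for illustration only.
examples = [
    ("Count daily active users from the events table.",
     "SELECT event_date, COUNT(DISTINCT user_id) AS dau "
     "FROM events GROUP BY event_date;"),
    ("Calculate average purchase value by customer segment.",
     "SELECT segment, AVG(purchase_value) AS avg_value "
     "FROM purchases GROUP BY segment;"),
]

prompt = few_shot_prompt(
    "Write a Databricks SQL query.",
    examples,
    "Identify the top performing products by region.",
)
print(prompt)
```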

Prompt Chaining

Prompt chaining involves breaking a complex task into smaller, manageable steps. This helps the model focus on each step and generate more accurate responses.

First Example:

You are a Data Engineer expert. Write a summary for my blog about how to use Databricks Community Edition.

Great! Now write an introduction based on this summary.

Excellent. Now expand this into a section titled “Why use Databricks Community Edition?”

And continue with separate prompts for each subsequent section.

Second Example: (Break a complex task into smaller steps)

If you don’t know the answer, say you don’t know. Avoid guessing. Be factual.

Now write an intro based on that summary.

Expand this into a section titled “Why use Databricks Community Edition?”
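In code, a chain is a loop that feeds each response into the next prompt. The sketch below assumes a hypothetical `call_model` function that takes a prompt and returns text; `fake_model` is a stand-in so the example runs without any model endpoint, and the "Previous output:" label is an arbitrary choice.

```python
from typing import Callable


def run_chain(steps: list[str], call_model: Callable[[str], str]) -> list[str]:
    """Send each step to the model, passing the previous answer along as context."""
    outputs: list[str] = []
    previous = ""
    for step in steps:
        # The first step goes out as-is; later steps carry the last response.
        prompt = step if not previous else f"{step}\n\nPrevious output:\n{previous}"
        previous = call_model(prompt)
        outputs.append(previous)
    return outputs


def fake_model(prompt: str) -> str:
    """Stand-in for a real model call (assumption: replace with your own client)."""
    return f"[response to: {prompt.splitlines()[0]}]"


steps = [
    "You are a Data Engineer expert. Write a summary for my blog about "
    "how to use Databricks Community Edition.",
    "Now write an introduction based on this summary.",
    "Now expand this into a section titled 'Why use Databricks Community Edition?'",
]

for output in run_chain(steps, fake_model):
    print(output)
```

Passing the model as a parameter keeps the chain logic independent of whichever model or client you end up using.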

Chain-of-Thought Prompting

Similar to prompt chaining, chain-of-thought prompting asks the model to explain its reasoning at each step, which tends to improve the quality and accuracy of the responses. For example, when you want the model to solve a math problem, you might ask it to work through the problem step by step:

If Susan is 5 years older than John, and John is 10 years old, how old is Susan? Let’s think step by step to solve this problem.

The same approach works for Databricks tasks. Ask the model to reason step by step:

I want to create a Delta Live Tables pipeline in Databricks. Let’s think step by step.
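As a rough sketch, the step-by-step cue can be appended programmatically so it is applied consistently. The helper name `with_chain_of_thought` is hypothetical. The last lines spell out the reasoning you would expect the model to produce for the age question.

```python
COT_CUE = "Let's think step by step."


def with_chain_of_thought(question: str) -> str:
    """Append the step-by-step cue unless the question already ends with it."""
    question = question.strip()
    if question.endswith(COT_CUE):
        return question
    return f"{question} {COT_CUE}"


prompt = with_chain_of_thought(
    "If Susan is 5 years older than John, and John is 10 years old, how old is Susan?"
)
print(prompt)

# The reasoning the model is expected to walk through:
john_age = 10             # Step 1: John is 10 years old.
susan_age = john_age + 5  # Step 2: Susan is 5 years older than John.
print(susan_age)          # Step 3: Susan is 15.
```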

Prompt Engineering Tips and Tricks

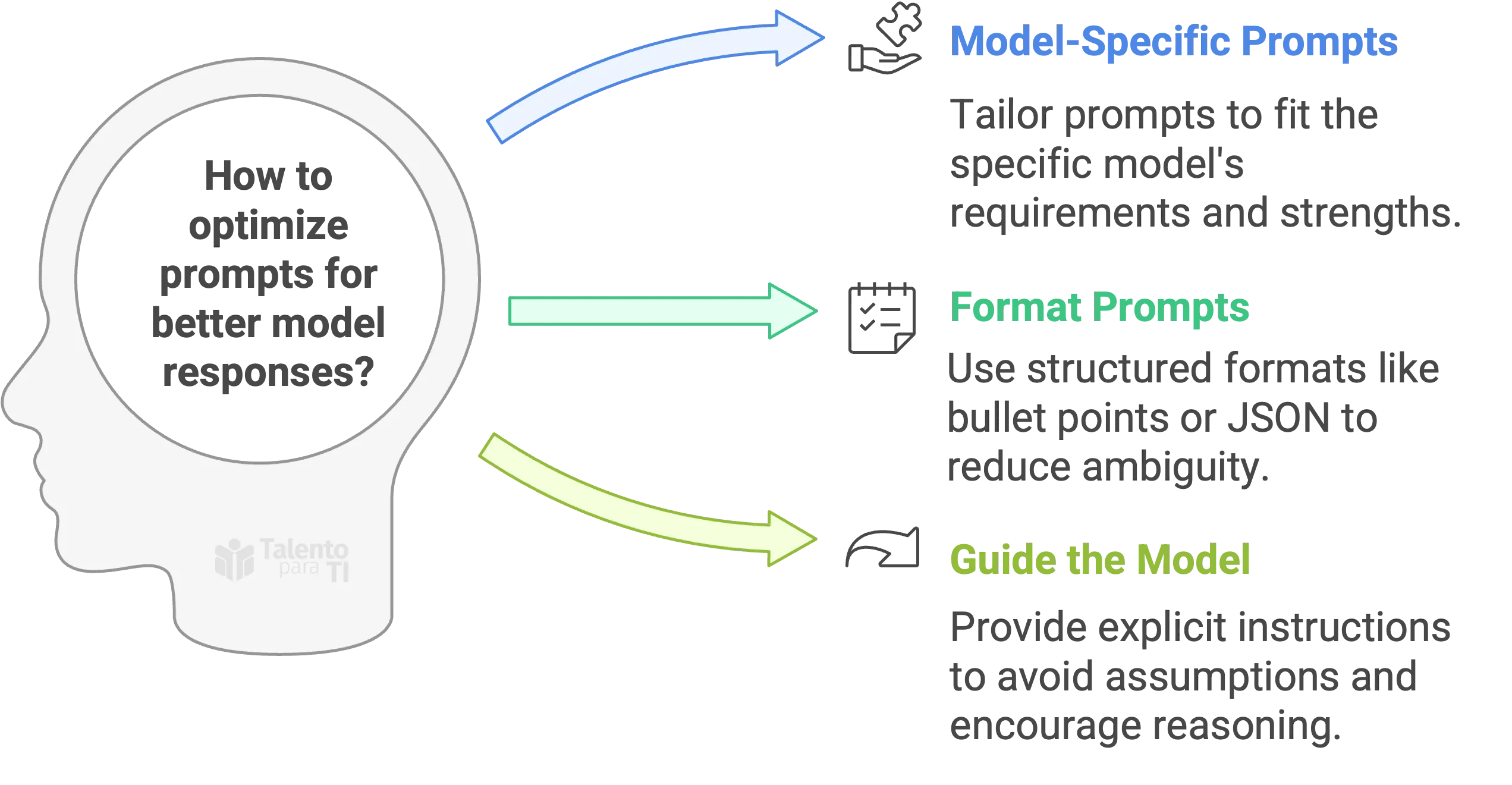

Prompts are model-specific

Different models may respond differently to the same prompt. It’s important to experiment with different prompts and techniques to find what works best for your specific use case.

- Different models may require different prompt formats.

- Different use cases may benefit from different prompt styles.

- Each model has its own strengths and weaknesses.

- Iterative development is key—test, tweak, and test again.

- Be aware of bias and hallucinations in the model’s responses.
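One way to make the test-and-tweak loop concrete is a small harness that runs every prompt variant against every model and collects the responses side by side. This is only a sketch: `compare` is a hypothetical helper, and the two "models" are trivial stand-in functions, not real model clients.

```python
from typing import Callable


def compare(prompts: list[str],
            models: dict[str, Callable[[str], str]]) -> dict[tuple[str, str], str]:
    """Run every prompt against every model and key results by (model, prompt)."""
    return {
        (name, prompt): model(prompt)
        for name, model in models.items()
        for prompt in prompts
    }


# Stand-in models (assumption: swap in real model calls here).
models = {
    "model_a": lambda p: p.upper(),
    "model_b": lambda p: p[::-1],
}
prompts = [
    "Summarize this table.",
    "Summarize this table in one sentence.",
]

results = compare(prompts, models)
for (name, prompt), output in results.items():
    print(f"{name} | {prompt!r} -> {output!r}")
```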

Formatting prompts helps

Using a specific format for your prompts can help the model better understand the task and generate more accurate responses. For example:

- Use bullet points, numbered lists, or clear delimiters.

- Request structured output formats such as HTML, JSON, or Markdown.

Formatting both the prompt and expected response helps reduce ambiguity.
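Requesting JSON pays off when you validate the response before using it. The sketch below uses Python's standard `json` module; the helper names `json_prompt` and `parse_response`, the key names, and the sample response are hypothetical, and the response string is hand-written rather than real model output.

```python
import json


def json_prompt(task: str, keys: list[str]) -> str:
    """Append an explicit JSON output instruction to a task."""
    key_list = ", ".join(f'"{key}"' for key in keys)
    return (
        f"{task}\n\n"
        f"Respond with a single JSON object containing exactly these keys: {key_list}.\n"
        "Do not include any text outside the JSON object."
    )


def parse_response(raw: str, keys: list[str]) -> dict:
    """Parse the model's reply and check that every expected key is present."""
    data = json.loads(raw)  # raises json.JSONDecodeError on malformed JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = [key for key in keys if key not in data]
    if missing:
        raise ValueError(f"missing keys: {missing}")
    return data


keys = ["sentiment", "summary"]
prompt = json_prompt(
    "Classify the sentiment of this review: 'Cluster startup is slow.'", keys
)

# Hand-written stand-in for a model response.
sample_response = '{"sentiment": "negative", "summary": "Slow cluster startup."}'
result = parse_response(sample_response, keys)
print(result["sentiment"])
```

If parsing fails, a common follow-up is to send the error back to the model and ask it to correct its output.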

Guide the model for better responses

You can improve response quality by explicitly guiding the model:

If you don’t know the answer, say you don’t know.

Avoid guessing. Be factual.

Provide a concise answer in 2–3 sentences. Condense this explanation.

Do not assume gender, age, or intent. Avoid asking for personal data like SSNs or phone numbers.

Think step by step.

Ask me questions one at a time and wait for my response.

In short, guide the model by stating your requirements explicitly, such as asking it to avoid assumptions or to be concise.
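Guidance like this is easier to reuse when it lives in one place and is prepended to every question. A minimal sketch, assuming a hypothetical `guided_prompt` helper and a "Follow these rules:" header of our own choosing:

```python
GUIDELINES = [
    "If you don't know the answer, say you don't know.",
    "Avoid guessing. Be factual.",
    "Provide a concise answer in 2-3 sentences.",
    "Do not assume gender, age, or intent.",
]


def guided_prompt(question: str, guidelines: list[str] = GUIDELINES) -> str:
    """Prefix a question with a bulleted list of rules for the model to follow."""
    rules = "\n".join(f"- {rule}" for rule in guidelines)
    return f"Follow these rules:\n{rules}\n\nQuestion: {question.strip()}"


prompt = guided_prompt("What is Databricks Community Edition?")
print(prompt)
```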

Benefits and Limitations of Prompt Engineering

Benefits

- Simple and efficient.

- Predictable results.

- Tailored output.

Limitations

- The output depends on the model used.

- Limited by pre-trained knowledge.

- May require multiple iterations.

- May require domain-specific knowledge.