MLflow 3.0 for GenAI: Traceability, Evaluation, and Scalability in One Platform

MLflow is an open-source platform for managing the full lifecycle of machine learning models. Since its launch in 2018, it has become a de facto standard in MLOps, helping data science and machine learning teams log experiments, version models, and deploy them reproducibly. Generative artificial intelligence (GenAI) changes the requirements: systems no longer only predict, they generate text, code, and other content. Organizations now need to measure the quality of generated responses, version prompts and models consistently, audit interactions, and ensure regulatory compliance, none of which traditional MLOps approaches fully cover.

MLflow 3.0 is the response to these challenges. More than an incremental update, it turns MLflow into a unified framework for experimentation, observability, quality evaluation, and governance of GenAI applications. Natively integrated into Databricks, this version provides end-to-end traceability of every run, consistent metrics across environments, and automated evaluation tools that detect problems with relevance, correctness, and safety, as well as hallucinations. With MLflow 3.0, organizations can move from isolated prototypes to reliable, scalable, and auditable production solutions that meet the rigor enterprise environments demand.

What is MLflow 3.0 for GenAI?

MLflow 3.0 is a major release of the open-source MLOps platform, built to address the specific challenges of GenAI. Compared with previous versions, it introduces:

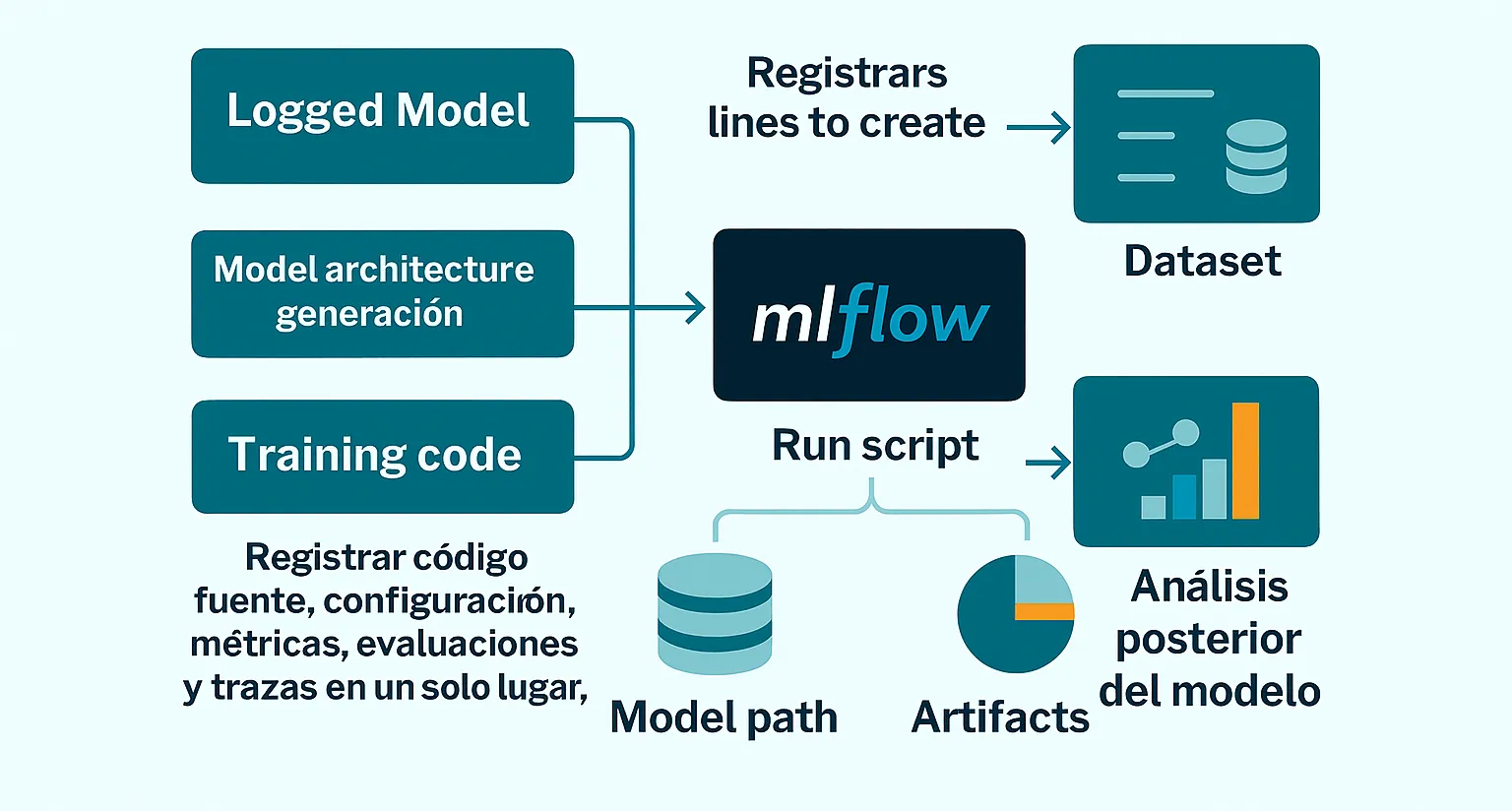

- LoggedModel: a new concept that links each model to its source code (commits), configuration, metrics, evaluations, and traces.

- Comprehensive observability: complete tracking of prompts, responses, latencies, tokens, and costs with a single line of code.

- Automated quality evaluation: built-in and customizable evaluators to measure relevance, correctness, safety, and hallucinations.

- Enterprise governance: version control, Unity Catalog integration, and regulatory compliance.

Key Advantages

| Advantage | Description |
|---|---|
| Unified platform | From development to production monitoring, everything happens in one place. |
| Total flexibility | Compatible with any LLM and with providers and frameworks such as OpenAI, LangChain, LlamaIndex, DSPy, and Anthropic. |
| Consistent metrics | What is measured in development continues to be measured in production, ensuring comparability. |
| Integrated human feedback | Turns real interactions into test cases to improve the model. |
| Prompt logging and versioning | Systematically test, compare, and optimize prompts. |
| Security and compliance | Unified governance with Databricks Unity Catalog, access control, and auditing. |

Automatic Traceability Demonstration in MLflow 3.0

A typical workflow with MLflow 3.0 does not require rewriting your application. You just need to enable automatic traceability before running your code:

```python
import mlflow
from openai import OpenAI  # Assumes the OpenAI Python SDK is installed

mlflow.autolog()  # Enable automatic traceability

# Reads the API key from the OPENAI_API_KEY environment variable
client = OpenAI()

# Your GenAI code remains the same
response = client.chat.completions.create(
    model="gpt-4",
    messages=[{"role": "user", "content": "Summarize the quarterly report."}],
)

print(response.choices[0].message.content)
```

What matters here is what happens behind the scenes: with mlflow.autolog(), every interaction with the model is logged centrally, including the original prompt, the response, the number of tokens used, response latency, and estimated operation cost. You don't need to write extra code to capture metrics or manually create tracking tables. Later, in Databricks or your own MLflow instance, you can view all traces, filter by experiment, compare results, and spot optimization opportunities (e.g., overly long prompts or models with a higher cost per token).
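The cost per interaction mentioned above ultimately comes down to token counts multiplied by a price. How MLflow or Databricks computes its estimate is not described here, so the following is only a minimal sketch of the arithmetic. The model names, the prices, and the `TraceSummary` structure are hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass

# Hypothetical prices in USD per 1,000 tokens; real prices vary by provider and model.
PRICE_PER_1K = {
    "model-a": {"input": 0.03, "output": 0.06},
    "model-b": {"input": 0.0005, "output": 0.0015},
}


@dataclass
class TraceSummary:
    """Hypothetical, simplified view of what a logged interaction contains."""
    model: str
    prompt_tokens: int
    completion_tokens: int
    latency_s: float


def estimated_cost(trace: TraceSummary) -> float:
    """Estimate cost as (tokens / 1000) * price, summed over input and output."""
    price = PRICE_PER_1K[trace.model]
    input_cost = (trace.prompt_tokens / 1000) * price["input"]
    output_cost = (trace.completion_tokens / 1000) * price["output"]
    return input_cost + output_cost


# The same prompt sent to two models: identical token counts, different cost.
traces = [
    TraceSummary("model-a", prompt_tokens=1200, completion_tokens=300, latency_s=2.4),
    TraceSummary("model-b", prompt_tokens=1200, completion_tokens=300, latency_s=0.9),
]

for t in traces:
    print(f"{t.model}: ${estimated_cost(t):.5f} in {t.latency_s:.1f}s")
```

Comparing the two rows side by side is the kind of analysis that centrally logged traces make possible: a long prompt raises the input cost for every model, while the per-token price determines how much.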

Automated Evaluation of GenAI Applications

Quality evaluation is one of the pillars of MLflow 3.0. This version introduces an evaluation system for measuring the performance of GenAI applications objectively, reproducibly, and continuously in any environment.

The evaluators cover four areas:

- Safety: detects unsafe content.

- Hallucinations: measures response fidelity.

- Relevance and correctness: checks usefulness and accuracy.

- Custom judges: adapt evaluation to your business needs.

This replaces costly manual tests with reproducible and consistent evaluations.
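To illustrate what a custom judge might look like, here is a minimal rule-based sketch in plain Python. It is not MLflow's evaluator API: in MLflow such logic would be registered as a custom evaluator, whereas this version runs without any MLflow setup. The business rules (no e-mail addresses, a word limit) and the names `Verdict` and `support_reply_judge` are hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass
class Verdict:
    passed: bool
    rationale: str


# Hypothetical business rule: replies must not expose e-mail addresses.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def support_reply_judge(response: str, max_words: int = 120) -> Verdict:
    """Check a response against two illustrative rules and explain the outcome."""
    if EMAIL_PATTERN.search(response):
        return Verdict(False, "response contains an e-mail address")
    word_count = len(response.split())
    if word_count > max_words:
        return Verdict(False, f"response has {word_count} words, limit is {max_words}")
    return Verdict(True, "response meets both rules")


samples = [
    "Your refund was issued today and should arrive within five business days.",
    "Please write to jane.doe@example.com for help.",
]

for sample in samples:
    print(support_reply_judge(sample))
```

Returning a rationale alongside the pass/fail flag keeps each evaluation explainable, which matters when results feed into audits.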

Migration and Technical Considerations

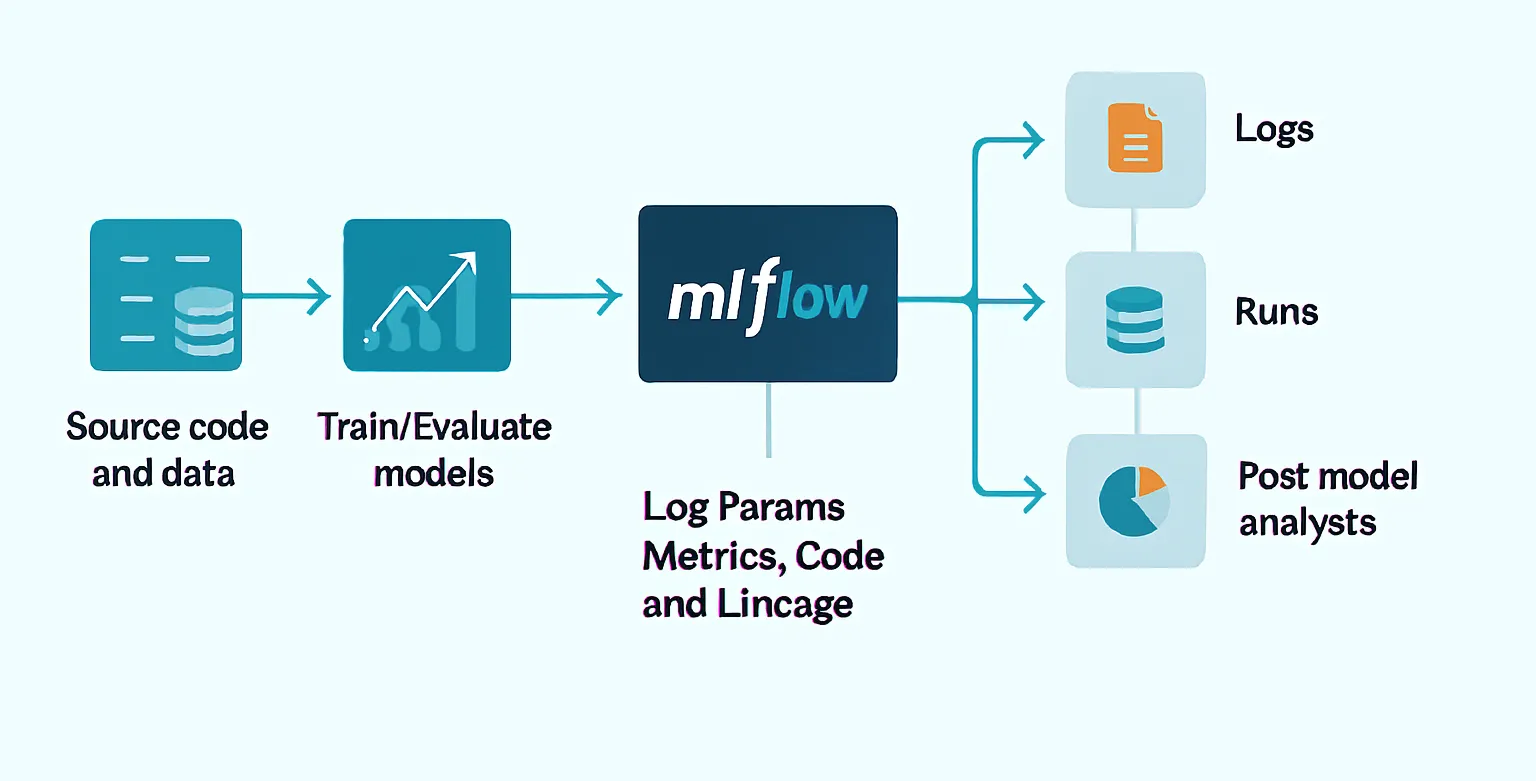

MLflow 3.0 is more than an update: it is a new way of working with GenAI models. The LoggedModel concept centralizes code, configuration, metrics, evaluations, and traces in one place, making auditing and reproducibility easier.

Additionally, with mlflow.autolog(), key information such as prompts, responses, latencies, and costs is automatically logged, both in development and production. This simplifies instrumentation and improves comparability across environments.

The diagram shows how these components integrate into a more structured flow, connecting code, data, and subsequent model analysis.
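To make the LoggedModel idea more concrete, the sketch below models the kind of linkage it describes: one record that ties a model to its commit, configuration, metrics, and traces. This is an illustrative stand-in written for this article, not MLflow's actual LoggedModel class. The class name `ModelRecord`, its fields, and the sample values are all assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class ModelRecord:
    """Illustrative stand-in for the LoggedModel concept, not MLflow's class."""
    name: str
    git_commit: str
    params: dict = field(default_factory=dict)
    metrics: dict = field(default_factory=dict)
    trace_ids: list = field(default_factory=list)

    def audit_summary(self) -> str:
        """Summarize everything linked to this model version in one line."""
        return (
            f"{self.name}@{self.git_commit}: "
            f"{len(self.params)} params, {len(self.metrics)} metrics, "
            f"{len(self.trace_ids)} traces"
        )


# Hypothetical model version with its configuration attached at creation time.
record = ModelRecord(
    name="support-agent",
    git_commit="abc1234",
    params={"model": "gpt-4", "temperature": 0.2},
)

# Evaluation results and traces are attached to the same record later on.
record.metrics["relevance"] = 0.91
record.trace_ids.append("trace-001")

print(record.audit_summary())
```

Because everything hangs off one record, an auditor can start from a model version and reach the code, settings, and interactions that produced a given result.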

Featured Use Cases

Continuous optimization of conversational agents

Monitor prompts and responses in production to iteratively adjust and improve chatbot performance.

Regulatory compliance and auditing

Version models, metrics, and traces to support compliance with regulations and standards (e.g., HIPAA, ISO) and to facilitate audits.

Production degradation detection

Identify drops in performance and response quality early, so you can retrain or adjust the application before the business is affected.
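One simple way to detect such a drop is to compare the mean quality score of recent interactions with an earlier baseline. The sketch below assumes quality scores between 0 and 1 are already available, for example from automated evaluation. The function name, window sizes, and threshold are hypothetical choices, not MLflow defaults.

```python
from statistics import mean


def quality_dropped(scores, baseline_size=5, recent_size=5, max_drop=0.10):
    """Return True when the recent mean is more than max_drop below the baseline mean."""
    if len(scores) < baseline_size + recent_size:
        return False  # Not enough data to compare two separate windows
    baseline = mean(scores[:baseline_size])
    recent = mean(scores[-recent_size:])
    return (baseline - recent) > max_drop


# Hypothetical per-interaction quality scores, oldest first.
healthy = [0.90, 0.88, 0.91, 0.89, 0.92, 0.90, 0.89, 0.91, 0.88, 0.90]
degraded = [0.90, 0.88, 0.91, 0.89, 0.92, 0.80, 0.76, 0.74, 0.71, 0.70]

print("healthy series dropped: ", quality_dropped(healthy))
print("degraded series dropped:", quality_dropped(degraded))
```

A fixed threshold on window means is deliberately crude. It works as a first alert, and a production setup would likely need to account for traffic volume and normal variance.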

Training with human feedback

Turn real interactions into test cases and training data for continuous improvement.
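As a rough sketch of that conversion, the function below keeps only interactions that a reviewer rated negatively and corrected, and turns each into an evaluation row. The feedback fields (`rating`, `corrected_answer`) and the output layout (`inputs`, `expectations`) are assumptions made for this example. Adapt them to whatever schema your feedback store and evaluation tooling use.

```python
def feedback_to_test_cases(feedback_records):
    """Turn reviewer-corrected interactions into evaluation rows."""
    cases = []
    for record in feedback_records:
        corrected = record.get("corrected_answer")
        # Only a negative rating with a correction gives a usable expected answer.
        if record.get("rating") == "down" and corrected:
            cases.append(
                {
                    "inputs": {"question": record["question"]},
                    "expectations": {"expected_response": corrected},
                }
            )
    return cases


# Hypothetical feedback collected from production interactions.
feedback = [
    {"question": "What is the refund window?", "answer": "14 days",
     "rating": "down", "corrected_answer": "30 days"},
    {"question": "Do you ship abroad?", "answer": "Yes", "rating": "up"},
    {"question": "Is support 24/7?", "answer": "No", "rating": "down"},
]

test_cases = feedback_to_test_cases(feedback)
print(test_cases)
```

Interactions without a correction are skipped here because they show that something went wrong but not what the right answer is. They could still be routed to a human review queue.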

Conclusion

MLflow 3.0 redefines how GenAI applications are managed in professional environments. By integrating complete traceability, automated evaluation, and version control, it provides a solid foundation to scale with confidence, audit precisely, and continuously improve.

Adopting it is not just a technical decision: it’s a strategic bet on quality, security, and operational sustainability throughout the lifecycle of your generative models.

Resources

Are you interested in learning more and boosting your GenAI projects? Check out the official documentation, the Databricks blog, and the GitHub repository to discover everything MLflow 3.0 has to offer.